Nilay Yilmaz

Ph.D. Student, School of Computing & Augmented Intelligence, Arizona State University.

I am a Ph.D. student at Arizona State University, working under the supervision of Professor Yezhou Yang.

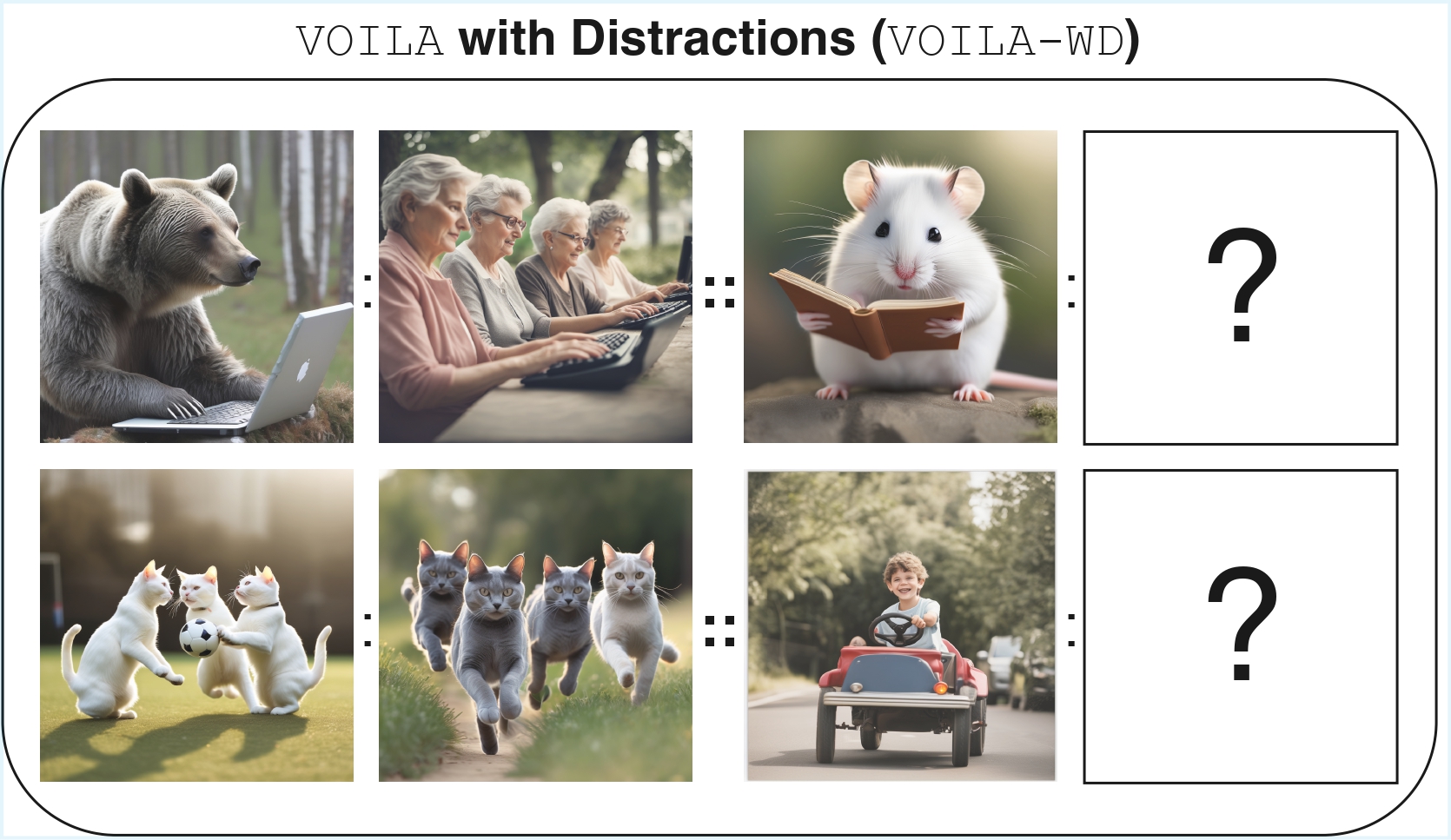

My research centers on evaluating the multimodal abstract reasoning capabilities of vision-language models (VLMs) and large language models (LLMs), with a particular emphasis on spatial visualization and visual analogy problems. These cognitively demanding tasks reflect core aspects of human intelligence, and my goal is to advance our understanding of human cognition by developing artificial systems that mirror such abilities.

I have extensive experience in developing dynamic dataset creation pipelines that include 3D scene construction grounded in physical principles and modular framework structures for flexible task generation. I believe that evaluating model capabilities should go beyond static benchmarks and limited multiple-choice evaluation formats, which fail to reflect a model’s true reasoning ability. Instead, evaluation must be open-ended, comprehensive, and human-aligned to uncover not only what a model gets wrong, but why and how that differs from human reasoning patterns.

In addition, I explore parameter-efficient fine-tuning and preference-based reinforcement learning as strategies to enhance the reasoning and problem-solving abilities of multimodal foundation models in complex, abstract domains.

News

| Date | News |
|---|---|
| Dec 10, 2025 | ✨ Released Unwritten Benchmark - a new challenge designed to probe abstract perceptual and cognitive abilities. Check out our paper and page! |
| Nov 15, 2025 | ✨ Released MentalBlackboard - an open-ended spatial visualization benchmark. Check out our paper and page! |
| Jan 15, 2025 | 🚀 VOILA is accepted at ICLR 2025! |